Creative writing and AI’s failure modes

In which I fall down a rabbit hole with a purported “AI novel writer,” and wonder what the result tells us about generative AI’s future.

I spent nearly six years as a technical writer at an AI company, and it was one of the best jobs I’ve ever had. I worked with some brilliant people, several of whom have gone on to found other AI-focused companies.

The AI that’s taken off in the last few years is not what we were working on there, though. It’s specifically generative AI, a variant based on machine learning and neural networks. And “taken off” is an understatement; I haven’t seen anything like this since the original dotcom boom. You may recall that the original dotcom boom ended in a spectacular dotcom crash. While I am not saying that this is foreshadowing, if you can read about Logitech adding an AI prompt builder to their mice and not think of a certain sock puppet, you are more starry-eyed than I am.

As someone who’s been a professional technical writer and at least nominally a professional fiction writer, I’m caught between two opposing viewpoints. I watch folks in tech circles (including many indie creators of writing, podcasts, software, whatever) using generative AI without any apparent concern over the ethical issues. And almost every tech blogger/podcaster I follow seems to take it as a given that GenAI is here to stay and is obviously the future of everything. I understand the enthusiasm, but I also find it frustrating how readily they handwave past significant problems with GenAI output, ranging from copyright violations to unreliable outputs (“hallucinations”).

On the flip side, many creators I know get instantly prickly if I admit even slight enthusiasm for any of this stuff, let alone suggest that there could ever possibly be value in generative ML. They don’t want to hear about how cool it is even in the most limited contexts. As far as they’re concerned, this is the second coming of crypto, a solution in search of a problem, a scam pushed by techbros who neither understand nor like creative work. I’m sympathetic to this take, but it’s also frustrating. It sweeps everything “AI” up into what should be a condemnation specifically of generative AI, and it dismisses any possibility that GenAI has value now—or could ever have value if we can work out those concerns. (That’s a big “if,” which I’ll come back to at the end.)

Authors might love a system that could keep a local index of the fantasy epic they’re three volumes into. When they try to remember what town they said Brenda the Elf Maiden was from, instead of searching for the word “Brenda” through four hundred thousand words of text, they could literally type “What town is Brenda from?” and get the answer. And as anodyne as ChatGPT’s outputs tend to be, I can imagine using it as a brainstorming partner, prompting it with things like “list a few themes in a myth about a woman given the power of a weather god,” or using it to analyze a story through different narrative theories and suggest improvements.

But AI boosters—developers, pundits, genuine enthusiasts, and techbros looking for the Next Big Thing—don’t want to stop there. They want it to actually write stories, to take the mere kernel of an idea and spit out a whole screenplay or novel, maybe even the cover art. If they get their way, AI will soon free us from the drudgery of (checks notes) creative pursuits that give our lives meaning! Cool. Cool cool cool.

Seriously, though, looking at how well LLMs do at such a task tells us more than whether AI is creative. “Good” fiction is subjective, but competent fiction involves defining a structure, formulating a plan, and executing that plan to produce long-form output. This describes more than writing novels: most of the more fantastic predictions about what AI can and will do for us (or to us) require similar skills.

My thesis has been that LLMs will suck at such complex tasks because of what they actually are at heart: predictive lexical analysis engines. Yet even the “old” ChatGPT 3.5 can confound my expectations. Recently, I prompted it to describe the seven-point story structure to me, which it did, and then in the same session prompted:

Using the seven-point story structure, write a short synopsis of a story about a 1920s private detective hired by a wife to find evidence her husband is cheating on her. The detective discovers the husband is being blackmailed, and this leads the detective to uncover a smuggling operation run by gangsters.

It responded:

Hook: In the bustling streets of 1920s Chicago, private detective Jack Malone is hired by a distraught wife to uncover the truth about her husband’s infidelity.

Plot Point 1: Jack begins his investigation and quickly discovers that the husband, Thomas, is not cheating on his wife, but is instead being blackmailed by an unknown individual.

Pinch Point 1: Jack’s efforts to uncover the blackmailer lead him to a seedy underground gambling den, where he narrowly escapes a dangerous confrontation with a group of thugs.

Midpoint: Jack stumbles upon evidence that the blackmail scheme is connected to a larger smuggling operation run by notorious gangsters in the city.

Pinch Point 2: As Jack delves deeper into the criminal underworld, he becomes a target himself, with the gangsters determined to silence him before he can expose their illegal activities.

Plot Point 2: In a thrilling showdown at a hidden warehouse on the outskirts of town, Jack confronts the gangsters and uncovers the full extent of their smuggling operation, ultimately bringing them to justice.

Resolution: With the truth revealed and the criminals behind bars, Jack delivers the evidence to Thomas’s wife, who is grateful for his help in not only saving her marriage but also bringing down a dangerous criminal enterprise. Jack walks away from the case with a renewed sense of purpose and a reputation as one of the city’s most skilled and fearless detectives.

Okay. “Jack Malone” is the detective Anthony LaPaglia played in the long-running crime drama Without a Trace, but setting that aside, this is impressive work at first glance, isn’t it? It knew what the seven-point structure was and applied it to my prompt to come up with a perfectly credible outline. Isn’t this showing both reasoning ability and a touch of creativity?

Wellllll…

Again, LLMs respond to prompts based on statistical probabilities. There’s a sense in which they’re reasoning about the text, but that’s not the same as understanding the text. This is where AI boosters leap in to smugly say, “Maybe that’s the way human brains function, too, did you ever consider that?” (And yet, you participate in a society! Curious.) But the unique failure modes of machine learning tell us that’s not, in fact, the way human brains function. An image classifier may correctly identify a picture of a dog 99.99% of the time, but once in a blue moon it’ll tell you a Mondrian painting is definitely a labradoodle. However these classifiers have taught themselves to identify dogs, they haven’t done it the way humans do.

They don’t teach themselves to generate text or images the same way, either. Even setting aside the fingers thing, floor patterns change, people subtly merge into chairs or keyboards, and straight lines in the background don’t quite match on different sides of a foreground object. Stable Diffusion, Midjourney, and friends “know” how to correlate the text in your prompt with images in their training data and blend them together in an often magical-seeming way. That’s not the same as knowing what floors and chairs and people are, though, or how backgrounds and lighting and perspective work. The same is true for text, sometimes in obvious ways (factual errors, failing at elementary-school-level logic puzzles), and sometimes in subtle ones.

In our outline’s case, the LLM has countless examples of both detective stories and seven-point story analyses in its corpus, and it computed the lexical equivalent of averaging the prompt and the structures together. You’d expect the result to be, by definition, average, and that’s precisely what we got: every time ChatGPT expanded on the prompt, it did so in the most generic way possible. The only details it gave were (plagiarized) names. Pinch Point 2 isn’t the “all is lost” moment it should be; it’s a description of how literally every detective-versus-the-mob story works. How does Jack get from confronting the gangsters to bringing them to justice? What evidence did he find? What’s the connection between the gambling den and the smugglers? And what’s with the implication in that pinnacle-of-absolute-median resolution that Jack had to renew his sense of purpose? Come on, he’s goddamn Anthony LaPaglia!

Another run-through with the same prompt might produce a better outline, one that hints at higher stakes and specific dangers and less hand-wavy resolutions—but it might produce a worse one, too. This is why some detractors dismiss this as “spicy autocomplete”; I think a better description is “galaxy-brain level Mad Libs.” Tweaking the prompt might help, but you’d be better off using the generic outline from the first run as a jumping-off point, filling in your own details, raising the stakes, eliminating copyright violations, and so on.

Now, that right there (the generated output as a jumping-off point) is arguably a solid case for using LLMs in creative writing: they can get you started, get you past writer’s block, spit out ideas that might prove helpful even when the ideas themselves aren’t that great. An LLM set up to apply narrative theory more directly, perhaps with retrieval-augmented generation, could be even better. Subtxt with Muse does this with the Dramatica Theory of Story, and while I haven’t used it myself, the actual-use examples on its website are very impressive. Of course, you could also take your prompt through brainstorming exercises manually, or with a non-ML program like the original Dramatica Story Expert. Story Expert probably won’t run on any Mac bought in the last decade. The developers spent the two decades before that doing the bare minimum to keep their codebase running on modern hardware (seriously, the thing somehow never had Retina fonts!), and are now buried under a mountain of technical debt. But I digress.

Meet Claude

But GPT-3.5/4 isn’t the only LLM in town. Many say Anthropic’s Claude is more “creative” (as squishily defined as that may be), and AI enthusiast/CEO Matt Shumer has created a script that uses Claude to write an entire novel. As he put it on Twitter/X,

Claude-Author is the first AI system that actually produces readable books. Still not perfect, but it’s a leaps and bounds improvement over previous approaches.

When someone asked, “Are the books any good, though? Thoughtful twists or exciting plots,” he responded, “Shockingly good.”

Is it true? Can Claude show adequate reasoning and creativity—or convincingly simulate both of them—well enough to write an imperfect but still shockingly good book?

A disclaimer

While I used generative machine learning for testing purposes here, to put the claim that there’s a there there under a microscope, I want to acknowledge up front that doing so isn’t a “Get Out of Ethical Concerns Free” card. I’ll get into those concerns in the last part of this essay.

If you think that means I shouldn’t have even run this experiment, I get it. But there’s no way to test the increasingly extravagant claims for AI besides, well, testing those claims. Critics of AI sometimes implicitly take the claims of the boostiest of AI boosters at face value, proceeding under the assumption that, yes, AI is on the verge of replacing novelists and screenwriters and artists and filmmakers. If those assumptions are wrong, that has big implications for boosters and critics alike.

So what is this?

Claude-Author is an open-source project that “utilizes a chain of Anthropic and Stable Diffusion API calls to generate an original novel.” (The original project used GPT-4 and specified “fantasy novel,” but the most recent version uses Anthropic’s Claude 3 and drops internal prompts that limit it to fantasy.) According to its README,

The AI is asked to generate a list of potential plots based on a given prompt. It then selects the most engaging plot, improves upon it, and extracts a title. After that, it generates a detailed storyline with a specified number of chapters, and then tries to improve upon that storyline. Each chapter is then individually written by the AI, following the plot and taking into account the content of previous chapters. Finally, a prompt to design the cover art is generated, and the cover is created. Finally, it’s all pulled together, and the novel is compiled into an EPUB file.
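The chain the README describes is easy to picture in code. Below is a minimal Python sketch of that shape, not Claude-Author’s actual source: `generate` is a hypothetical stand-in for whatever LLM call you use, the prompt wording is my paraphrase, and the cover-image and EPUB steps are left out. The detail worth noticing is the chapter loop, where every prompt carries all the chapters written so far.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns generated text.
Generate = Callable[[str], str]


def write_novel(generate: Generate, premise: str, num_chapters: int) -> Dict[str, object]:
    """Chain prompts in the order the README describes (prompt wording is illustrative)."""
    plots = generate(f"List several possible plots for this premise:\n{premise}")
    best = generate(f"Choose the most engaging plot and improve it:\n{plots}")
    title = generate(f"Extract a title from this plot:\n{best}")
    storyline = generate(f"Write a detailed {num_chapters}-chapter storyline:\n{best}")
    storyline = generate(f"Improve this storyline:\n{storyline}")

    chapters: List[str] = []
    for n in range(1, num_chapters + 1):
        # Every chapter prompt carries the premise, the storyline, and all
        # earlier chapters, so prompts get longer as the book goes on.
        previous = "\n\n".join(chapters)
        prompt = (
            f"Premise:\n{premise}\n\nStoryline:\n{storyline}\n\n"
            f"Previous chapters:\n{previous}\n\nWrite chapter {n}."
        )
        chapters.append(generate(prompt))

    # In the real project this prompt is handed to an image model; omitted here.
    cover_prompt = generate(f"Describe cover art for this novel:\n{best}")
    return {"title": title, "chapters": chapters, "cover_prompt": cover_prompt}


if __name__ == "__main__":
    calls: List[str] = []

    def fake_generate(prompt: str) -> str:
        calls.append(prompt)
        return f"[output {len(calls)}]"

    book = write_novel(fake_generate, "An elf princess becomes queen.", 3)
    print(len(book["chapters"]), len(calls))  # prints: 3 9
```

A fake generator like the one in the usage block is handy for checking the plumbing without spending a single token.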

This is the sort of promise that gives AI buffs thrills and novelists hives. Scammers have filled Amazon’s ebook store with nonsense for years; that’s not a subjective dig at the quality of self-published books, but a statement of fact. So it’s not surprising that scammers have embraced AI, and that’s before AI promised to actually be good at it.

So how good is it?

Getting Claude-Author running

As with many science projects of late, Claude-Author runs in JupyterLab (née Jupyter Notebook), an interactive Python environment. As written, the code lacks any error handling; I added some to help debug repeated crashes, because it kept exceeding token limits in the Anthropic API. (Prompts get converted into tokens, numeric representations of words or “subwords,” and the LLM generates its output as tokens, too.) I also separated out the steps so I could view the generated outline and cover art prompt.
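For that kind of debugging, wrapping each step so a failure names the step, with a few retries, goes a long way. This is a hypothetical sketch (the name `call_with_retry` and its parameters are mine, not Claude-Author’s); with Anthropic’s Python SDK you’d pass its rate-limit exception, `anthropic.RateLimitError`, as `retry_on`. Retrying only helps with a per-minute limit if you wait long enough for the window to reset, so a real `base_delay` would be tens of seconds, and a single request bigger than the whole per-minute budget will never succeed however often you retry.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retry(
    step_name: str,
    fn: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    attempts: int = 3,
    base_delay: float = 1.0,
) -> T:
    """Run one pipeline step, retrying on the given exception types and
    naming the failing step if every attempt fails."""
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except retry_on as exc:
            if attempt == attempts:
                raise RuntimeError(
                    f"{step_name} failed after {attempts} attempts: {exc}"
                ) from exc
            # Back off exponentially: base_delay, then 2x, 4x, ... before retrying.
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")
```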

Claude charges by the token, both in and out. Claude 3 “Haiku,” the model Claude-Author uses, has a rate limit of 50,000 tokens per minute at the cheapest paid tier. The prompt for each chapter consists of the user prompts, the generated outline, and every previously written chapter. With about 4,000 tokens used per chapter and a few thousand more for the outline and cover art, you’re going to run out of tokens by 12 or 13 chapters on the cheapest paid tier. This is not a documented limitation, and being a simple wrapper script doesn’t fully excuse its bugs.
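The arithmetic behind that estimate, as a rough Python sketch. It assumes each chapter is one request, that input and output tokens count against the same 50,000-token-per-minute budget, about 4,000 tokens per chapter, and 2,000 to 3,000 tokens of fixed overhead for the user prompts and outline (that overhead range is my assumption). It ignores several requests landing in the same minute, which would only make things worse.

```python
def request_tokens(chapter: int, overhead: int, per_chapter: int) -> int:
    """Tokens for the single request that writes `chapter` (1-based).

    Input: fixed overhead (user prompts + outline) plus every earlier chapter.
    Output: the new chapter itself.
    """
    earlier_chapters = (chapter - 1) * per_chapter
    return overhead + earlier_chapters + per_chapter


def first_chapter_over_limit(limit: int, overhead: int, per_chapter: int) -> int:
    """Smallest chapter number whose request exceeds a per-minute token limit."""
    if per_chapter <= 0:
        raise ValueError("per_chapter must be positive")
    chapter = 1
    while request_tokens(chapter, overhead, per_chapter) <= limit:
        chapter += 1
    return chapter


if __name__ == "__main__":
    for overhead in (2_000, 3_000):
        print(overhead, first_chapter_over_limit(50_000, overhead, 4_000))
    # prints "2000 13", then "3000 12"
```

With 3,000 tokens of overhead, the request for chapter 12 needs 51,000 tokens; with 2,000, chapter 13 is the first to go over, consistent with the 12-or-13-chapter estimate above.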

Book 1: The Elven Crown

So, my first prompt:

- Plot summary: An elf princess finds herself made the queen after her mother (the current queen) and her sisters are killed in a suspicious accident. She must learn to become a good ruler while navigating court political intrigue, dealing with rivals to the throne who would like to see her removed, and solving the mystery of the accident that killed her family.

- Writing style: literate but still friendly and conversational.

- Chapters: 12.

You may recognize this as a gender-swapped version of The Goblin Emperor, an acclaimed fantasy of manners novel—basically, court intrigue in a fantastic setting—from a decade back.

The outline Claude wrote for itself, which it called The Elven Crown: Ascent of Queen Elara, was a list of bullet points, optimistically specifying how many pages each chapter should be. Here are the first two chapters:

Chapter 1 (10 pages)

- Introduce Elara, the young elf princess, as the story’s protagonist. Describe her personality - intelligent, caring, a bit shy and unsure of herself. She lives a comfortable life in the royal palace, but longs for more adventure and responsibility.

- Set the scene of the royal palace and the kingdom Elara lives in. Describe the luxurious surroundings and the daily life of the royal family.

- Foreshadow some tension and political intrigue brewing beneath the surface, with mentions of Elara’s mother (the Queen) being stressed and preoccupied lately.

- End the chapter with the shocking news that the Queen and Elara’s sisters have been killed in a mysterious carriage accident. Elara is now the sole heir to the throne.

Chapter 2 (10 pages)

- Elara is reeling from the sudden loss of her family. Describe her grief and the funeral proceedings. She is overwhelmed at the thought of becoming Queen.

- Introduce some of the key members of the royal court - the High Council, the Captain of the Guard, the Royal Advisor, etc. They offer their condolences but also start subtly vying for Elara’s favor and trust.

- Elara meets with the High Council, who inform her that she must be crowned Queen immediately, per the laws of succession. She is unsure and scared, but accepts her new role.

In the following chapters (according to the outline), the new queen “has a strange dream/vision that seems to be a warning about a dark force threatening the kingdom.” Chapter 4 introduces a “potential romantic interest, perhaps a handsome young member of the royal guard or a visiting diplomat.” After “strange occurrences and accidents start happening around the palace,” a mysterious cloaked figure who’s been watching her warns her about an impending threat and urges her to flee the palace for her own safety. She agrees (because why not), ducking the royal guards and traveling “incognito through the kingdom,” staying one step ahead of pursuers. A band of rebel fighters agree to help her sneak back into the palace, but their leader warns her about “powerful forces at work, with deep roots in the kingdom’s history.” Eventually, she returns, confronting “the true mastermind behind the plot against Elara’s family, perhaps a disgruntled member of the High Council or a rival noble family.” A battle ensues. “Just when all seems lost,” her cloaked ally reappears, “joining the fight and turning the tide.” The last chapters find her “rooting out corruption and restoring trust in the kingdom’s institutions,” and the cloaked figure is “revealed to be a powerful sorcerer who has been guiding and protecting Elara all along.” The romantic subplot comes to a resolution.

So, it’s like that Disney film where the princess has to leave home to Learn a Lesson before returning triumphantly (you know the one), with a generous dusting of Discount Tolkien. The biggest problem with the outline as it stands, besides the remarkable lack of originality, is that Elara has no character arc beyond moving from unsure she’s ready to be a queen to being confident she can be. What does she want, besides not screwing things up? What does she need to learn? What does it take to succeed?

Even so, a good writer could take this outline and come up with, well, something from it. Let’s read the actual opening!

Elara gazed out the ornate window of her bedchamber, her emerald eyes scanning the lush gardens and towering spires of the royal palace. Though she had lived here all her life, the grand, gilded halls and meticulously manicured grounds had never felt like a true home. There was an emptiness, a disconnect, that nagged at her constantly.

At 18 years of age, Elara was the youngest of three princesses - a position that afforded her every comfort and luxury imaginable, yet left her feeling isolated and unfulfilled. While her elder sisters reveled in the pomp and privilege of court life, Elara longed for adventure and the freedom to explore the world beyond the palace walls.

She sighed, her slender fingers tracing the intricate patterns etched into the windowsill. Elara knew her role was to one day succeed her mother, Queen Alaria, as ruler of the kingdom of Valeria. But the weight of that responsibility filled her with a sense of dread rather than pride. Was she truly ready to bear the burden of an entire realm on her shoulders?

A soft knock at the door interrupted Elara’s pensive musings. “Princess? Your mother requests your presence in the throne room.” It was Mara, her most trusted handmaiden.

Elara straightened her posture, smoothing the delicate folds of her ivory gown. “Thank you, Mara. I shall be there momentarily.”

“Show, don’t tell” may be over-prescribed as basic writing advice, but this opening is the platonic archetype of why that advice exists. We’re told everything and shown nothing. Beyond that, note the utter lack of concrete imagery: we’re told the royal palace has gilded halls, manicured grounds, and towering spires, but what do they look like?

“Ah, Elara. Thank you for joining us,” Queen Alaria said, her voice betraying a hint of strain. “Please, come and stand with your sisters.”

Elara obediently took her place between Aeyla and Celeste, acutely aware of the numerous pairs of eyes watching her every move. The members of the High Council, the kingdom’s most powerful advisors, were gathered in a semicircle around the throne, their expressions unreadable.

“My daughters,” the Queen began, her gaze sweeping across the three princesses. “I have summoned you all here today because… because I have grave news.” She paused, her lips trembling slightly. “Your father, the King, has been killed in a terrible accident.”

Oh, no! The king! *record-scratch* Wait. My prompt said the queen and the princess’s sisters had been killed. What happened to the king might need to become backstory, but if the queen is still alive, she takes over, not Elara. (Unless the country doesn’t let women be rulers, but that ends the story right here too, doesn’t it.)

As the Queen dismissed the Council and the sisters were ushered out of the throne room, Elara couldn’t help but feel a growing sense of foreboding. Something was not right, she could feel it in the air. The kingdom she had known all her life was on the precipice of a great upheaval, and she was powerless to stop it.

That night, as Elara lay awake in her bed, haunted by the day’s events, she couldn’t shake the feeling that her life was about to change forever. The weight of the crown loomed over her, a burden she was not sure she was ready to bear. But with her father gone and her mother’s health visibly deteriorating, Elara knew she had no choice.

She would have to find the strength within herself to become the Queen Valeria needed, even if it meant sacrificing her own dreams and desires. For the sake of her family, her people, and the kingdom she had sworn to protect, Elara would have to rise to the occasion.

Little did she know, the challenges that lay ahead would test her resolve in ways she could scarcely imagine. The future of Valeria hung in the balance, and Elara’s very survival would soon be at stake.

Okay, Claude simply isn’t following its own outline, is it? It never described the daily life of the royal family, or mentioned the Queen being stressed and preoccupied. The chapter didn’t end with the news of the Queen’s death, or even the King’s. And again, those last few paragraphs are cliché after cliché with no specificity.

Chapter 2

The morning sun peeked through the ornate windows of the royal palace, casting a warm glow over the solemn proceedings taking place within. Elara stood beside the grand, gilded caskets that held the lifeless forms of her mother and sisters, her emerald eyes glistening with unshed tears.

She had spent the past two days in a fog of disbelief, her mind struggling to comprehend the devastating turn of events that had robbed her of her family. One moment, they had been alive and well, and the next… gone, taken from her in the blink of an eye.

Surprise! Back to the original outline: the King goes unmentioned, and the Queen and Elara’s sisters are dead.

A soft knock at the door interrupted her pensive musings. “Your Majesty?” It was Mara, her most trusted handmaiden. “Forgive the intrusion, but there is a matter that requires your attention.”

Wasn’t Mara introduced in Chapter 1 with the same phrasing? Why, yes, she was. Claude will introduce her again in Chapter 3 with exactly the same paragraph as above. And again in Chapter 4.

I could continue fisking The Elven Crown, showing how it repeats not just phrases and actions but whole entire scenes, sometimes three or four times, but you get the idea. We’re told Elara is “determined to emerge stronger, wiser, and more resolute” and has “a renewed sense of focus and determination” and is “determined to be the beacon of hope and stability that her people so desperately needed” within four paragraphs, because (within the same short passage) “she had been forged in the crucible of her family’s tragedy.” No, she hasn’t, because it’s only chapter four. The novel is about that crucible, how it forges her. The phrase “the weight of the crown” occurs eight times in the text, usually as “the weight of the crown never felt heavier.” Did you know the crown is heavy? It’s a metaphor! Get it? A big stinkin’ hoary cliché of a metaphor that Claude’s infallible calculations predict you want to read at least once a chapter!
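Counts like these are easy to reproduce, since an EPUB is just a ZIP archive of (X)HTML files. Here is a minimal sketch using only Python’s standard library, assuming UTF-8 chapter files. The function names are hypothetical, and this isn’t necessarily how the counts here were made. It matches case-insensitively on whole words and allows any whitespace between them, so a phrase broken across a line or an `<em>` tag still counts.

```python
import re
import zipfile
from html.parser import HTMLParser
from typing import BinaryIO, List, Union


class _TextExtractor(HTMLParser):
    """Collect the text content of an (X)HTML document, ignoring tags."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def epub_text(source: Union[str, BinaryIO]) -> str:
    """Return the text of every HTML/XHTML file inside an EPUB.

    Files are read in name order, not spine order; for counting, that's fine.
    """
    texts = []
    with zipfile.ZipFile(source) as book:
        for name in sorted(book.namelist()):
            if name.lower().endswith((".xhtml", ".html", ".htm")):
                parser = _TextExtractor()
                parser.feed(book.read(name).decode("utf-8"))
                parser.close()
                texts.append(" ".join(parser.parts))
    return "\n".join(texts)


def count_phrase(text: str, phrase: str) -> int:
    """Case-insensitive whole-word count, allowing any whitespace between words."""
    words = r"\s+".join(re.escape(word) for word in phrase.split())
    return len(re.findall(rf"\b{words}\b", text, flags=re.IGNORECASE))
```

Usage would look like `count_phrase(epub_text("novel.epub"), "the weight of the crown")`, where the filename is a placeholder.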

Like I said, this isn’t spicy autocomplete as much as galaxy-brain Mad Libs. Claude generates phrases, paragraphs, and even whole scenes paraphrased from a vast library of public domain fantasy stories and far vaster library of copyrighted fiction scraped off the web. Anyway, that’s why the plot has this shape (the most common kind of princess story is, obviously, a Disney Princess® story), why there is a mysterious cloaked figure, why we have sorcerers, why the outline ends with a “Scouring of the Shire” vibe. Claude-Author is generating text that matches a statistically median elf princess novel.

There is no romantic interest introduced, ever. The cloaked figure introduced in chapter 5 is her most trusted advisor, Lord Everett, but later on the figure is magically the rebel leader. The text refers to the “accidents” the outline says were happening around the palace, but we never know what they are. (We never know what the “unrest” among the people is, either.) Chapter 5 ends in the middle of a sentence, a bug that continues from here on out. Chapter 6 ends in the middle of the same sentence, in fact.

The real villains are sorcerers in an evil cabal, rather than a rival family (dispensing with the one part of the outline that actually followed the prompt: we asked for a novel of political intrigue, remember?). They’re revealed by Everett first, then by the rebel leader as if the first revelation never happened. (Twice.) Elara goes to meet the rebels, who are “working to undermine the forces that seek to destroy [Elara’s] kingdom,” because Claude does not know what rebels do. She returns to the castle with the rebels. Everett is revealed to be conspiring with the sorcerers! Elara is, with no foreshadowing, a master swordswoman. (Claude does not do foreshadowing, at all.)

We seesaw back and forth around the outline. Scenes repeat themselves near-verbatim with reckless abandon. Everett switches sides. Another cloaked figure who is not Everett or the rebel leader appears, giving a variant on a speech we’ve read at least three times by now about dark conspiracies and sorcerers and blah blah blah. The novel ends not with Elara but the new mysterious cloaked figure saving the day:

Seizing the moment, the cloaked figure launched a barrage of arcane energies, the raw power of their sorcery slamming into the sorcerer’s weakened frame. The towering figure let out a bone-chilling scream as they were engulfed in a blinding flash of light, the very air crackling with the intensity of the magical onslaught.

When the light finally faded, the sorcerer’s lifeless form crumpled to the ground, their dark power dissipating like a wisp of smoke in the wind. Elara felt a surge of triumph and relief wash over her, her heart pounding in her chest as she surveyed the

Yes, it ends mid-sentence. Don’t worry, though—I’m sure everyone lived Happily Ever

Claude wrote this cover prompt for Stable Diffusion:

The cover features a regal portrait of Elara, adorned in her royal attire and standing tall with a determined expression. In the background, the silhouette of the royal palace looms, with shadowy figures lurking in the foreground, hinting at the dark forces conspiring against her.

As you can see, it decided to just make Elara the shadowy threatening figure. There are also a few typical GenAI bugs (e.g., the moon isn’t actually round).

Book 2: The Elf Queen’s Ascension

I lowered the number of tokens from 4000 to 3200 to try to create a longer book. Bzzt. Instead, it lowered the word count of each chapter. (Chapter lengths tend to grow as the book gets longer. That might account for the broken sentences, but Claude 3 has a 200,000-token context window, meaning the amount of text it should be able to fully consider in a prompt, so that shouldn’t be the problem.)
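One way to test the truncation theory, offered as a hypothesis rather than something I checked: Anthropic’s Messages API reports why generation stopped in a `stop_reason` field, and a value of `"max_tokens"` means the reply hit the output cap set by the request’s `max_tokens` parameter, which is separate from the context window. The Python sketch below works on a plain dict shaped like the API’s JSON response; the helper names and the punctuation fallback are my own.

```python
from typing import Any, Dict

# Characters a finished chapter would plausibly end on (heuristic, not exhaustive).
TERMINAL = (".", "!", "?", '"', "\u201d", "'", "\u2019")


def chapter_text(response: Dict[str, Any]) -> str:
    """Concatenate the text blocks of a Messages-API-style response dict."""
    return "".join(
        block.get("text", "")
        for block in response.get("content", [])
        if block.get("type") == "text"
    )


def looks_truncated(response: Dict[str, Any]) -> bool:
    """True if generation stopped at the max_tokens cap, or, as a fallback
    heuristic, if the text doesn't end with closing punctuation."""
    if response.get("stop_reason") == "max_tokens":
        return True
    return not chapter_text(response).rstrip().endswith(TERMINAL)
```

If chapters that end mid-sentence consistently come back with `"max_tokens"`, the output cap, not the context window, is the likelier culprit.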

An old truism in writing classes is that if you give twenty students the same prompt, you’ll get twenty different stories. I gave Claude-Author the same prompt the next day, assuming this would be true for it, too. Surprise! The details weren’t identical, but they were damn close: the main character is named “Alara” instead of “Elara.” It’s still full of clichés. The phrase “the weight of the crown” doesn’t appear eight times in the text; it appears 45 times, usually as “the weight of the crown pressed down upon her.” The plot still involves trusted advisors, sorcerers, leaving the palace, making a triumphant return, and mysterious cloaked figures who undermine the protagonist’s agency, so I won’t spend much time on it.

The Elven Crown never actually uses the word “elf” or, but for the title, “elven”; The Elf Queen’s Ascension at least pays lip service to the notion that these are, you know, elves. This time Claude generated chapter titles, and boy, they are not good!

- The Burden of the Crown

- The Burden of Sovereignty

- Whispers of the Past

- The Burden of Destiny

- Whispers in the Shadows

- Whispers from the Shadows

- The Gathering Storm

- “The Unveiling of a Dark Conspiracy”

- The Awakening

- Forging Alliances

- The Crucible of Battle

- The Awakening of Ancient Power

- The Guardians of the Darkness

- The Reckoning

- “Rebuilding the Elven Realm”

- “A New Dawn for the Elven Realm”

(I am pretty sure I played several of these as D&D modules.)

Why are some in quotes and some not? I don’t know, but they appear that way in the generated ePub file. So do different chapter titles, because Claude generated them both for an internal “make chapter titles” prompt (which didn’t work on the first novel) and for each chapter anyway (which it didn’t bother with before). So, Chapter 2 is both “The Burden of Sovereignty” and “The Weight of the Crown” (drink!), while Chapter 13 is both “The Guardians of the Darkness” and “The Descent into Darkness.”

The sole exception is Chapter 17. Wait, what? The outline was for 16 chapters, but it wrote a Chapter 17 anyway, even though Chapter 16 is the actual ending. The chapter titles for Chapter 17 are, for the record, “Echoes of the Past” and—I swear to God—“Title for Last Chapter.”

By the way, the prompt specified 18 chapters.

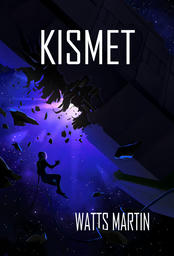

Book 3: Not Kismet

For the last experiment, I went bonkers: I asked for a version of my science fiction novel, Kismet. Here’s the prompt:

- A salvager who lives aboard her spaceship is wrongly accused of stealing valuable data from a wreck. As she tries to clear her name by recovering the data from whoever really stole it, she learns that a terrorist group that hates totemics, humans like herself modified to have animal characteristics, is behind it—the group that killed her mother years before.

- Writing style: third-person and present tense, using modern language with a literate, stream-of-consciousness vibe

- Chapters: 15

The real Kismet has more to it—Gail, the protagonist, has to grapple with what “home” and “family” mean to her in a way that she’s been avoiding for decades, and possibly ironically, questions about artificial intelligence surface—but it hits the main plot points and offers enough to work out a thematic hook.

Claude wrote this outline with paragraphs rather than bullet points. Here are the first few:

Chapter 1 (10 pages)

The story opens with Kira, a talented salvager who lives aboard her spaceship, the Scavenger’s Delight. She is haunted by the memory of her mother’s death at the hands of an anti-totemic terrorist group years ago. As she scavenges through the wreckage of an abandoned ship, Kira discovers a cache of valuable data. But before she can claim it, security forces arrive and accuse her of theft, confiscating the data. Kira protests her innocence, but the authorities refuse to listen.

Chapter 2 (10 pages)

Dejected, Kira returns to her ship, pondering her next move. She suspects the data was stolen by someone else and planted on her ship to frame her. Determined to clear her name, Kira begins investigating the incident, using her extensive contacts in the salvage community to gather information. She learns that the data contained sensitive information about a classified military project.

Chapter 3 (10 pages)

Kira’s inquiries lead her to a shady information broker named Xander, who claims to have information about the real thieves. He agrees to share what he knows, but only if Kira helps him with a dangerous job. Reluctantly, she agrees, and the two set out on a mission to infiltrate a heavily guarded research facility.

This is already a little weird—she’s accused of theft after finding the data in the wreck, then in the next chapter she thinks it was planted on her ship to frame her. Huh? The outline goes on to have Kira and Xander discover “the Purity Crusaders, a fanatical anti-totemic organization,” run the facility; they plan to use the stolen data to develop “a weapon that can target and disable totemics.” This is not far off from my real story, even getting close to the name I used for the bad guys! That’s about it for similarities, though. They find more conspiracies, Kira “becomes increasingly convinced that the group is responsible for her mother’s death” (which my protagonist knew from the start, because duh), and it gets more and more action-y and Star Wars-y as it goes on. They introduce a hacker and a former member of the Purity Crusaders who helps them. It also keeps going past the final confrontation to have another Scouring of the Shire style denouement. Claude-Author doesn’t do anything with the idea that totemics have animal characteristics, and indeed seems to have forgotten that part of the prompt.

Here’s the opening of (sigh) Scavenger’s Crusade:

Kira’s fingers glide across the control panel of her ship, the Scavenger’s Delight, as she navigates the treacherous debris field. Her eyes scan the wreckage, searching for any salvageable parts or valuable data that could fetch a decent price on the black market. This is her life – a constant dance with the unknown, a high-stakes game of finding and claiming what others have discarded.

As she maneuvers the Scavenger’s Delight through the maze of twisted metal and shattered hulls, Kira can’t help but be reminded of the day her mother died. The memory haunts her, a scar that refuses to heal. She remembers the terror in her mother’s eyes, the desperate pleas for help that went unanswered. Kira had been powerless to save her, and that failure has weighed heavily on her ever since.

Shaking off the dark thoughts, Kira focuses on the task at hand. She spots a partially intact data core and guides her ship closer, maneuvering with the precision of a seasoned pilot. Her hands move with practiced ease as she deploys the salvage arm, carefully extracting the valuable component.

“Jackpot,” Kira murmurs, a small smile tugging at the corners of her lips. This data core could be worth a small fortune, enough to keep her ship fueled and her supplies stocked for the next few months. She begins the process of securing the salvage, her mind already racing with the possibilities.

But just as Kira is about to disengage the salvage arm, a sudden flare of activity on her sensors catches her attention. She tightens her grip on the controls, her heart pounding as she recognizes the telltale signature of security forces. Cursing under her breath, Kira knows she needs to act fast.

This is how Kismet opens:

The call that shatters Gail’s life comes disguised as a gift from her past. It starts the way all of them have for the last fourteen years: a beedle boop and her ship’s voice, a pleasant female contralto, sounding directly in her left ear. “You have an incoming call.”

She’s walking outside, or at least what she thinks of as “outside” here, heading for inside. Kingston’s temperature stays at thirty-one degrees during its day cycle to make it more like its equatorial Earth namesake, but couldn’t they have improved on the climate instead of slavishly emulating it? If she were cisform it might be tolerable, but as a totemic, it’s crazy-making hot.

Kis should have said who it was, but it’s got to be Dan again, with something else she can do while she’s still on Kingston. Hey, it’ll be quick and you’ll only need to buy a couple parts and you know I’ll pay you back as soon as I get back on my feet.

Right. Dan owes her twenty-four thousand three hundred and counting, and she doesn’t have to pull that number up on her HUD to verify it. It’s burned into her memory right now. So’s another number: the payment she got just this morning from Smith and Sons Salvage, undervaluing her last haul by ninety percent. They’ll drag her appeal out for months, and her budget is already on fumes.

She sighs. “Kis, tell Dan I can’t—no. Tell him he can go fuck himself with—”

Claude-Author speed runs through its setup. Five paragraphs in, Kira has the data core in her possession with security forces chasing her, while the junk she’s salvaging inexplicably reminds her of the day her mother died. Once more it deviates from both the prompt and its own outline: both specify a wreck, singular, which is not what Claude’s first chapter describes. The security forces scare Kira off from retrieving the “data core”; they never accuse her of theft or confiscate it. In the next chapter, she’s sure the data core is somehow a setup, but that doesn’t fit what Claude actually wrote. It’s as if it’s missing its own story beats, then insisting “no, I didn’t, they were there all along.”

But what’s Kira’s personality? What’s her setting like? Her current situation? Five paragraphs into my actual book, you’ve got hints of that with Gail. The very first sentence foreshadows both the call she’s receiving and what it leads to. You don’t know any of that with Kira.

And you never will, because Claude-Author didn’t do any world-building (and if you asked, it wouldn’t follow it anyway). Like elf princesses Alara and Elara before her, Kira never shows distinct personality; she’s the default setting from the scrappy action heroine factory. No other characters are totemics. We never learn anything about Kira’s mother, what she believed in, how she died. Kira never truly grapples with her past, because she has no backstory apart from “the bad guys killed her mom.”

Beyond that, the generated novel has the same issues The Elven Crown does. The same cliché-fest, the same tell-don’t-show, the same generality over specificity. Phrases, paragraphs, and scenes repeat themselves. Toward the end of the novel, it piles on conspiracies in a way that becomes comical:

Talia’s voice crackles over the comm, her tone urgent. “Kira, I’ve found something else. It looks like the Crusaders have been funneling resources and intelligence to a shadowy organization called the Purifiers.”

Kira’s brow furrows, her mind racing with the implications. “The Purifiers? Who are they?”

(…14 paragraphs later…)

Talia’s voice crackles over the comm, her tone urgent. “Kira, I think I’ve found something else. It looks like the Crusaders and the Purifiers have been funneling resources and intelligence to a high-ranking military officer named General Tarkus.”

Kira’s brow furrows, her mind racing with the implications. “Tarkus? Wasn’t he one of the key figures behind the development of the weapon we stopped?”

(…6 paragraphs later…)

Talia’s voice crackles over the comm, her tone urgent. “Kira, I’ve found something else. It looks like the Crusaders and the Purifiers have been funneling resources and intelligence to a shadowy organization called the Synthesis.”

Kira’s brow furrows, her mind racing with the implications. “The Synthesis? Who are they?”

General Tarkus is never mentioned before or again, unless you count that this scene appears verbatim twice. Was the LLM plotting his return in a scene it failed to write because it ran out of tokens—or was it merely generating words statistically similar to Star Wars? One’s mind races with the implications!

Throughout Claude’s generated novel, the battles get bigger, but Kira’s personal stakes never change. She doesn’t grow, she doesn’t learn anything (the names of shadowy organizations do not count for character purposes, sorry), she never screws up in ways that have consequences, she’s never faced with heart-wrenching choices.

The cover prompt generated for Scavenger’s Crusade was:

The cover should feature Kira, the protagonist, amidst the wreckage of spaceships and debris, conveying the salvage and sci-fi elements of the story. The color palette should be a mix of muted tones and bold highlights, creating a sense of gritty realism and high-stakes adventure.

Here’s the generated cover:

This image gets weirder the longer you look at it. I’ll give the “debris” a grudging pass, but why is it on fire? Is it actually her boot on fire? What is she holding in her hand? Why does the space between her legs not match the rest of the background? Does one of those legs disappear below the knee? Does the other leg have two knees?

These are the two cover variants for the real Kismet, by the way, both by artist Teagan Gavet. Gavet’s art far more clearly conveys the idea of a wrecked (singular) spaceship, and in the second one, Gail is clearly an animal-human hybrid. (Rat. Thanks for asking.)

So how good is AI at creative writing?

It’s not. It’s really bad, you guys. People who think AI-generated fiction is “shockingly good,” or even readable, are not people who care about fiction.

(This does not imply it’s useless as a creative writing tool, although that comes with caveats. We’ll come back to that.)

A few anticipated objections

You should have used GPT-4, not Claude! Claude-Author creator Matt Shumer appears to disagree with you: the scripts he wrote originally used GPT-4, and he moved them to Claude.

The repository includes example output from the GPT-4 version, though, and it’s not better. It doesn’t have the repeated scene bug, but its plotting is even less coherent. Seemingly important characters are never returned to. Chapter and even book titles sound almost random: in The Awakening of Eldoria’s Last Legend, no legend is ever described, and the last chapter is “Ascendancy of the Last Arcanum,” a term never used until then. Beyond that, it has all of Claude-Author’s foibles: no character arcs, no themes, no specificity in its imagery, all-you-can-eat clichés.

Claude “Haiku” is the smallest Claude model. You should have used a bigger one! This is the model Mr. Shumer chose for Claude-Author, so it’s the one I stuck with. “Sonnet” costs twelve times as much to use as Haiku does, and the best one, “Opus,” is sixty times the cost. I’m not dedicated enough to this experiment to pay that much. I didn’t want this to go completely untested, though, so I used Claude’s chat interface—which uses the Sonnet model—with the same prompts that Claude-Author would use to generate an outline for the elf princess story.

It was comparable to Claude’s other outlines, and even reused two chapter titles, “Whispers in the Shadows” and “The Gathering Storm.” So far, not so good. I cajoled it into writing the first three chapters, and hey! Sonnet’s writing is free of the scene-repeating bug, and it’s competent enough you could almost believe it might develop a style. A LaCroix of style, if you will.

But it’s not all artificial sunshine and robot roses. The princess is again named Alara, again has emerald eyes, and is again in an action story. The outline isn’t far off from the others it generated, and when it’s different it’s not better. The “accident” is a monster that kills her family on a hunting trip, which wasn’t in the outline, yet the third chapter seems to forget that. Also, the palace has an “infirmary wing,” which seems odd for a fantasy story. It’s as if Claude doesn’t actually understand genre, but knows that statistically speaking, injured people in stories often wake up in infirmary wings!

So: technically more readable, but my final grade remains the same.

GPT-5, Claude 4, and so on will be even better! Maybe, but we can only judge the AI we have. And as I’ll elaborate on later, these problems aren’t solved by bigger, faster models.

A final grade

Writing mechanics: Good! Claude-Author (and even GPT-Author) is decent, and if you’re willing to spend on Claude Sonnet, it might keep you reading a while.

Story structure: Bad. The outlines it produces are over-generalized and lack understanding of theme, character, and subtext. And it doesn’t follow them anyway. Even when it does, it does a poor job with rising tension beyond “wait, we’ve found another bad guy who wasn’t in the outline!”, and has no idea what foreshadowing is.

Prose style: Barf. Practically every phrase is a cliché, either lifted directly or remixed from text on the web. The dialog from every character is samey-samey, and nobody has a personality defined beyond the prose repeating one or two characteristics it’s locked onto. (Elara, both Alaras, and Kira are so determined. Determined to show you their determination, determinedly. Determined!)

Reasoning about machine reasoning

When we ask whether computers can reason, we need to examine what we mean. We’ve been teaching computers how to make inferences and draw conclusions for decades:

```prolog
mortal(X) :- human(X).
human(socrates).
```

That’s Prolog, an AI language that dates back to the early 1970s, expressing the syllogism “All humans are mortal. Socrates is a human.” Can Prolog reason out “therefore, Socrates is mortal”? If you were at a Prolog prompt, you could query it and get a response:

```prolog
?- mortal(socrates).
yes
```

Ta-da!

LLMs certainly appear to reason the same way that Prolog can. Sure, what’s going on behind the scenes is entirely different, but when the result is the same, should you actually care about the process?

Yes. You should.

> There is a basket on the table in the living room, and a plum in the basket.

> What room is the plum in?

In the context of the classic board game 'Clue,' the character Professor Plum can be in any of the rooms on the game board.

This silly exchange with ChatGPT (GPT-4) is real, but the catalyst was operator error: the first line didn’t get sent to the LLM. If it had, it’d have given a correct answer. Yet it’s still illustrative that it arbitrarily picked a context for the question and proceeded to answer anyway. This is what AI researchers gently term “hallucinating,” because the more honest description of “bullshitting” does not win you big VC money.

Why do LLMs bullshit? Because, to beat on this drum one last time, LLMs are performing lexical analysis and interpolation between words represented as points in vector space. Points that are closer together in that space map to words with greater semantic similarity. The “rules” an LLM follows are vector math operations; the rules Prolog-style systems follow are logic operations. So Prolog “knows” that it can’t answer the question “what room is the plum in” without more information. An LLM doesn’t. The vector math always arrives at an answer. Training the model to say “sorry, I don’t know that” isn’t impossible, but it’s difficult, and compared to the directness of Prolog, unreliable.
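To make the contrast concrete, here is a minimal Prolog sketch of the plum question, in the same style as the Socrates example. The predicate names (inside/2, on/2, room/1, room_of/2) are hypothetical, invented for this illustration. With the first line of the exchange encoded as facts, the query succeeds; asked about something it has no facts for, Prolog simply fails instead of picking a context and inventing an answer.

```prolog
% Hypothetical facts encoding "There is a basket on the table
% in the living room, and a plum in the basket."
room(living_room).

inside(table, living_room).
inside(plum, basket).

on(basket, table).

% A thing is in a room if it sits directly in that room...
room_of(Thing, Room) :-
    inside(Thing, Room),
    room(Room).
% ...or if it is inside something that is in that room...
room_of(Thing, Room) :-
    inside(Thing, Container),
    room_of(Container, Room).
% ...or if it is on something that is in that room.
room_of(Thing, Room) :-
    on(Thing, Surface),
    room_of(Surface, Room).

% ?- room_of(plum, Room).
%    succeeds with Room = living_room
% ?- room_of(professor_plum, Room).
%    fails: no fact mentions professor_plum, so nothing can be proved
```

Strictly speaking, Prolog doesn’t “know” anything here either: under its closed-world assumption, whatever it can’t prove from its facts and rules counts as false. But that failure is predictable and traceable back to a missing fact, which is the property the vector math lacks.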

Lastly, our novel-writing exercise strongly suggests that the longer the LLM’s input and output are, the more likely the model is to break down. The prompts Claude-Author created clearly did guide the output step by step, but Claude couldn’t follow a roadmap that detailed. It became visibly buggier as the prompts grew to tens of thousands of words, but it didn’t follow them faithfully even in its opening chapters. Yes, I had subjective style complaints, but here I’m talking about objective flaws, ranging from not following the input prompt correctly to repeating output text verbatim multiple times to producing incomplete sentences.

You might object that more computing power naturally solves this. But again, all the Claude 3 models, including Haiku, can handle 200,000 tokens in their context windows, and GPT-4 can handle 128,000. At least in theory, these models already have enough capacity to handle everything the Claude-Author script feeds them. In practice, they clearly aren’t handling it well.

Okay, but so what?

Does anyone really expect generative AI systems to write the next Great American Novel? And even if they can’t, that doesn’t mean they won’t be dazzlingly perfect in all sorts of other use cases, right?

Well, first off, many AI boosters do expect generative AI systems to get that good. Many apparently believe that they’re already that good; Claude-Author’s creator, Matt Shumer, runs a company, HyperWrite, whose entire premise is that AI can “deliver high-quality writing in less time, from first draft to final edits.” There’s another program called Sudowrite which promises to “write 1,000s of words in your style.” Based on my experimentation, both with the opening of Kismet and with their own provided sample of The Da Vinci Code, it cannot write in either my style or Dan Brown’s. The people who were enthusiastic about Claude-Author’s announcement on X (Twitter) and GitHub were mostly people revved up to have it write novels for them.

And there are certainly authors watching what’s happening to the market for commercial art and worrying that AI is coming for them, too. In a recent (January 2024) survey by the Society of Authors,

- A quarter of illustrators and over a third of translators surveyed said that they’ve lost work to GenAI.

- A third of illustrators and over 4 in 10 translators say GenAI has decreased the income they’re getting when they do get work.

- Nearly two-thirds of fiction writers and over half of non-fiction writers believe GenAI is going to hit their income.

At this point, it strikes me as unlikely that generative AI will ever do creative writing well, but that’s slim comfort. I’ve made my living for a decade as a technical writer, and while I don’t think GenAI will be good at that, either, companies will push to treat it as good enough. And people who do not, in fact, care about reading, from solo scamtrepreneurs to bottom-feeding publishers and studios, will push hard for AI-generated shovelware. I’d bet money there are shitty broken books “written” by Claude-Author already filling Amazon’s virtual shelves and sitting in submission queues at unsuspecting major publishers.

Beyond that, we have to ask whether there’s any reason to think the quirks and errors we’ve seen here are unique to long-form creative writing. If an LLM can’t follow a novel outline when producing its output, can we expect it to follow anything else comparably complex? If it can’t reliably look at the last five chapters it wrote and produce chapter number six without deviating from the series or producing gibberish, can we expect it to be more reliable with any other kind of serial content?

And what happens when the content it’s generating isn’t a novel about a determined elf princess, but an operation manual for dangerous equipment? A set of hiring recommendations? A medical diagnosis?

There’s got to be some positives, right?

Eh? I guess?

While I don’t love the outline GPT-3.5 created for the detective story in the first part or the outlines Claude-Author made, you could workshop any of them into something better. More generally, LLMs could help with brainstorming, help get you past writer’s block, and help with outlining. Earlier, I mentioned Subtxt with Muse, a specialized writing app that incorporates the Dramatica narrative theory into its training. This use case renders GenAI’s particular failure modes largely inconsequential: Muse analyzes your ideas using Dramatica’s rule-based story form model, which shapes its responses into specific, meaningful guidance. If you’re down with the quirky way Dramatica looks at story structure (and comfortable with Subtxt’s steep subscription cost), it might be for you. (Dramatica offers a curious mix of deep insight that other story structure approaches lack, clever but debatably actionable hierarchies of story elements, and a weirdly essentialist insistence on calling a character’s problem-solving style their “mental sex.”)

And I’d still love that “local author search function” idea I mentioned in the first part. As someone who’s honestly pretty bad at remembering physical details of my characters, I’d love to type “what color are Gail’s eyes again?” and get the correct answer of green. (This is not a new idea; academic-focused word processor Nota Bene has had Orbis, a “free-form text retrieval system,” for about forty years.) But what I wouldn’t love is a search function which, if it can’t find the answer, confidently tells me Gail’s eyes are blue. Using an LLM as a natural-language front end to an Orbis-like system sounds good, but letting the LLM actually perform the search and return the result, trusting it to return “not found” rather than making an answer up? Not so good.

Of course, both of those positive uses come with those other caveats that all the AI skeptics keep harping on, because:

The caveats the AI skeptics keep harping on matter

You know the ones: whether the training corpora of all publicly available LLMs use copyrighted work without permission in ways that don’t qualify as “fair use”; the enormous environmental costs AI is starting to exact as it ramps up; how generative AI is already used to devalue creative work. As Harry “Hbomberguy” Brewis wrote,

If people were frank about their use of AI and also if we lived in a post-capitalist utopia, AI would be a very interesting experiment. I would love to see what people do with it. People talk about “prompt-mancy,” where they learn how to write the exact line of text to make something really interesting come out of this machine. I wish we could enjoy it on that level without in the back of our minds going, This is going to put so many people out of a job. So many other people’s work was stolen, unpaid, for it to be able to make this.

The idea of using GenAI for brainstorming and outlining is cool! So is the idea of that local author search function. Yet in practice, anything utilizing existing, popular models inherits the ethical concerns and legal liabilities facing those models. Your AI-powered muse is well-trained enough not to spit out near-verbatim paragraphs from Harry Potter and the Billionaire Transphobe when given a sufficiently crafty prompt? Great, but if the courts rule OpenAI’s training doesn’t meet the fair use test and your system is built on top of GPT-4, you still got trouble (with a capital “T” and that rhymes with “C” and that stands for Copyright). Even if the AI companies successfully outwit the legal challenges, the ethical challenges won’t disappear. We do not live in that post-capitalist utopia.

As I watch Google, Microsoft, and Apple rush headlong toward AI all the things, I can’t help but notice the other elephant in the room. See it? It looks photorealistic at first glance, until you notice that the background in the space between its legs doesn’t quite match the rest of the background, and its trunk may have two and a half nostrils. Yes, that one. Here’s the thing. Is “this natural language web search makes something up three or four percent of the time” an improvement over original Google? Is asking for a list of citations that you still have to manually check a true time-saver? Is quickly generating code with a hidden bug which will bring down your production server if you don’t catch it actually super helpful?

Is the environmental cost worth it?

Is the cost to independent creators worth it?

And do we actually want systems which, no matter how fast and advanced they get, can definitionally never produce work better than the statistical median to be writing—or even substantively contributing to—our presentations, white papers, documentation, art, music, and, yes, novels?

I can only conclude with the immortal words of Claude-Author: the weight of the crown never felt heavier.

To support my writing, throw me a tip on Ko-fi.com!

© 2024 Watts Martin · License: CC BY-NC-SA 4.0

Contact: Mastodon · Bluesky · Email
